The Situation

A couple of weeks ago, a colleague and I were tasked with extracting and integrating raw Press Ganey patient satisfaction data into Epic's Caboodle/Star Data Warehouse.

After signing a Data-Use-Agreement (DUA), Press Ganey provided us credentials to an sFTP site to access XML files they deposit daily. Well daily except for weekends, which is odd, and might suggest this feature isn't automated on their end. (Gasp!)

Having already experienced difficulty sending data to Press Ganey earlier in the year during my hospital's implementation for patient satisfaction, we were not optimistic about how easy it would be to fetch data from them.

We were right.

Press Ganey's raw patient satisfaction exportable data format is not a flat file easily importable into a database. It's XML (which is not bad per se), it's not the same XML format used by CMS, and they offer ZERO documentation on usage/specs. I quote:

Press Ganey is not able to provide technical support for XML data feeds. If you have any questions about using the data file, please contact your organization's internal IT administrator.

Pretty annoying, when you consider their Core Measures reporting services require hospitals to export far more complex data, in an easily-importable, flat-file for them.

Like a snooty foodie taste-tester, who is himself, a terrible, bumbling chef in the kitchen.

The Solution

First up was automating the sFTP fetch of XML files. We did this our usual way:

- SSIS "Execute Process Task"

- WinSCP Windows FTP Client

- WinSCP script file with credentials and a bandwith-minimizing "synchronize" command to retrieve new files from a remote sFTP server.

Next we dove into the XML files to figure out how the data was represented and how to get it out. Should be straightforward enough, right?

Give us an hour, maybe two.

We totally got this.

Not so fast.

First we ran into some null-namespace issues in our .NET XML library.

And then there was the issue of parsing.If Press Ganey had provided just an XSD (XML Schema Definition) file -- the absolute, bare-minimum documentation for any respectable XML integration -- it would've been pretty easy to generate reliable C# classes for deserializing their XML data files.

But they didn't.

**DEEP BREATH**

And if Press Ganey's incoming data specs weren't so unreasonable and inconvenient, I might think their outgoing data specs/documentation were made purposefully difficult -- to lock customers in to their version of "in-app" purchases (consulting services.) But as is, I can't tell where accidental incompetence ends and willful malevolence begins.

Whew, okay, I feel better now. Where were we again? Right! Deserializing XML.

We don't need no stinkin' documentation or XSD from Press Ganey, we have Windows laptops! No, really.

There's a nifty utility (xsd.exe) available as part of the Windows SDK that you can use to:

- Generate an XSD schema definition file from an XML document -- in this case, an actual raw patient satisfaction XML data file from Press Ganey.

- Generate C# classes from said XSD schema definition file.

For me, this utility was located on my computer at:

C:\Program Files (x86)\Microsoft SDKs\Windows\v10.0A\bin\NETFX 4.6.1 Tools\xsd.exe

Exact location likely different depending on the version of windows and .NET dev tools installed.

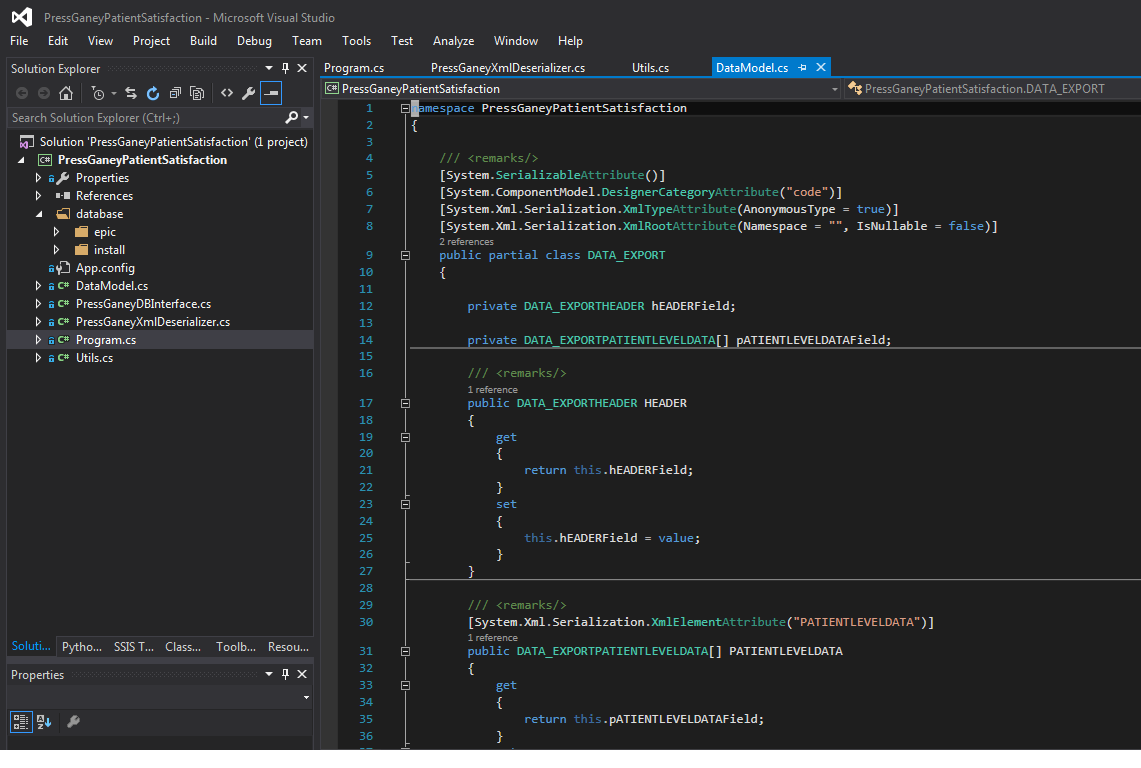

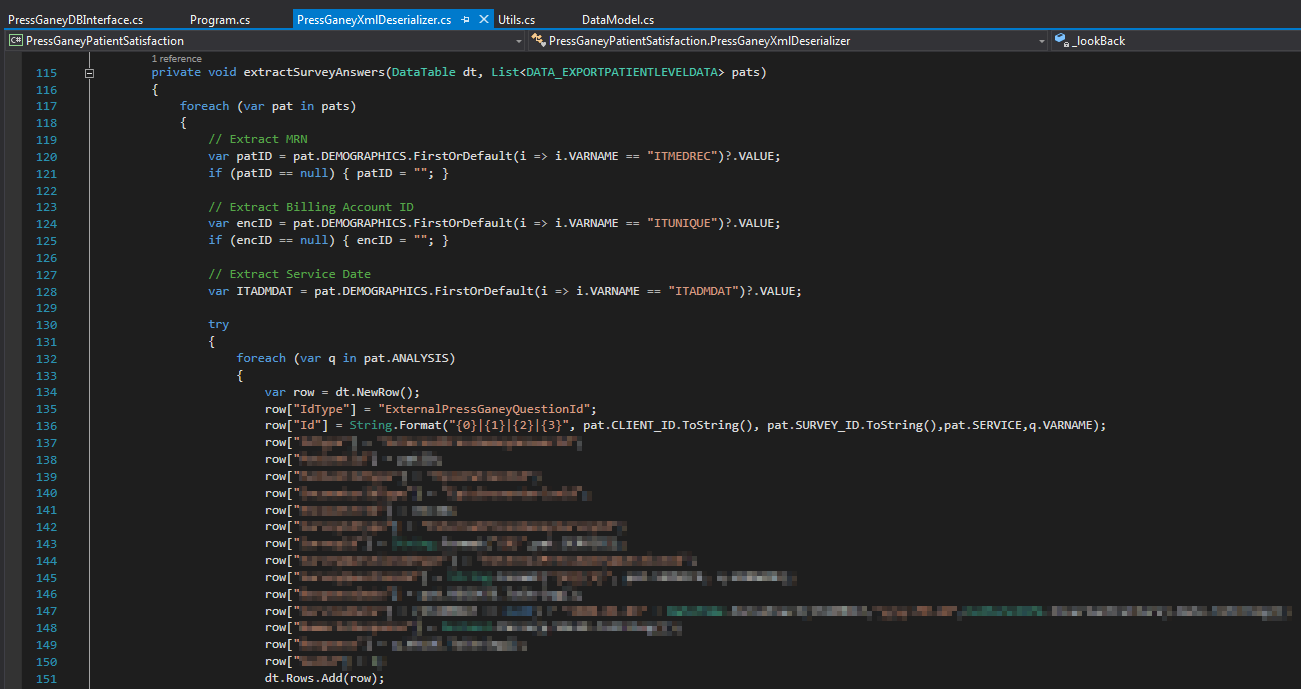

We used the xsd.exe utility to generate a XSD from a Press Ganey raw XML file, and again to generate C# classes from that XSD. With some finagling, debugging, and a little regex wizardry, we were finally able to fully deserialize the XML files into C# objects. Hooray.

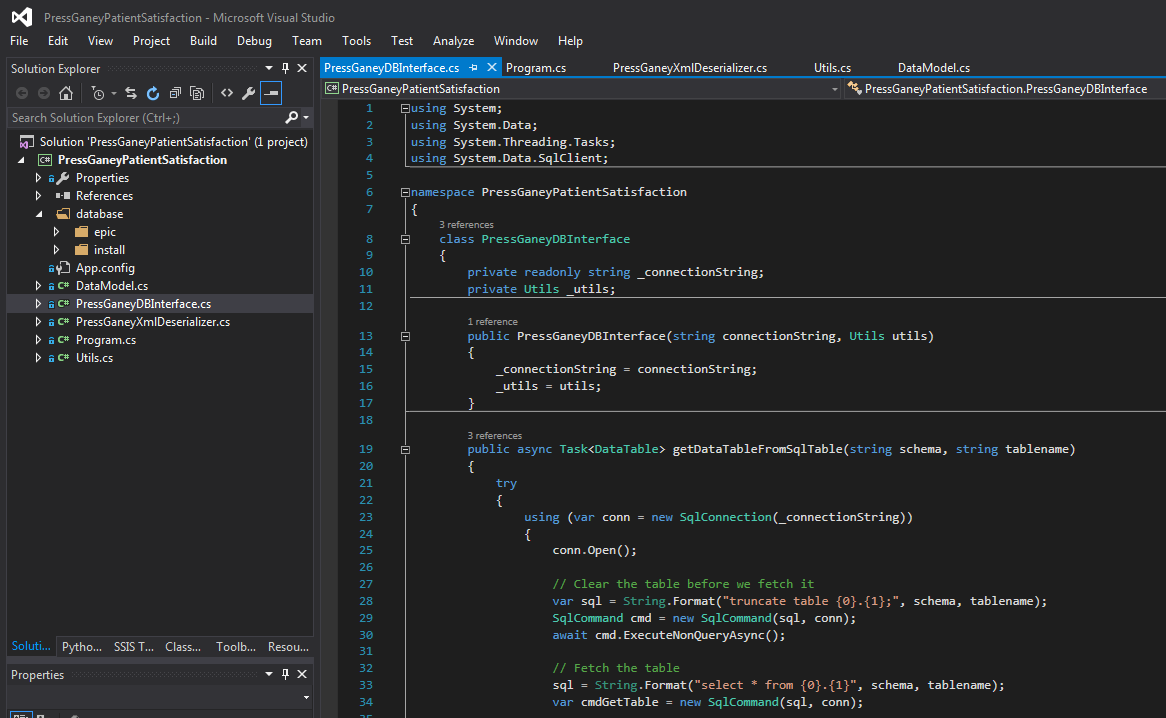

Next up was writing the data contained in these C# objects to our database.

First we started with the end result -- the transient warehouse ETL/stage tables we expected to write to. Then we had our code create in-memory DataTables that mimicked those stage tables exactly, looped through the deserialized C# objects to populate the DataTables, and then we BulkCopy-ed them to the stage tables.

From here, we added a few Data Flow components to the SSIS package that integrated the data in the stage tables with Epic's Star Data Warehouse. Specifically we performed lookups and upserted records for:

- SurveyDim

- SurveyQuestionDim

- SurveyAnswerFact

Lastly, we cleaned up the code a bit, made it install-able, created documentation and published it to it's own private repository on Github.

If you're an Epic customer and would like more info or access to this Github repository, email Yuki.